Bulk Inserts: MongoDB vs CouchDB vs ArangoDB (Dec 2014)

More than two years ago, we compared the bulk insert performance of ArangoDB, CouchDB and MongoDB in a blog post.

The original blog post dates back to the days of ArangoDB 1.1-alpha. We have been asked several times to re-run the tests with the current versions of the databases, so here we go.

Test setup

We have again used the PHP bulk insert benchmark tool to generate results for MongoDB, CouchDB, and ArangoDB. The benchmark tool uses the HTTP bulk document APIs of CouchDB and ArangoDB, and the binary protocol for MongoDB, which does not offer an HTTP bulk API. The benchmark tool ran on the same machine as the database servers, so network latency can be ruled out as an influencing factor. Neither replication nor sharding was used.
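To make the two HTTP bulk APIs more concrete, here is a minimal Python sketch that only builds (and does not send) the kind of bulk request each server receives. It is not the PHP tool's code. CouchDB's `POST /{db}/_bulk_docs` endpoint takes a `{"docs": [...]}` body. For ArangoDB we assume the `/_api/import` endpoint with `type=list`, which accepts a plain JSON array of documents. The host and port constants and the database and collection names are illustrative assumptions. MongoDB is left out because the benchmark drove it through its binary protocol rather than HTTP.

```python
import json
import urllib.parse
import urllib.request

# Assumed local endpoints (default ports of each server).
COUCHDB_URL = "http://localhost:5984"
ARANGODB_URL = "http://localhost:8529"


def couchdb_bulk_request(db: str, docs: list) -> urllib.request.Request:
    """Build a POST to CouchDB's _bulk_docs endpoint.

    CouchDB expects the documents wrapped in a {"docs": [...]} object.
    """
    body = json.dumps({"docs": docs}).encode("utf-8")
    return urllib.request.Request(
        f"{COUCHDB_URL}/{db}/_bulk_docs",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def arangodb_bulk_request(collection: str, docs: list) -> urllib.request.Request:
    """Build a POST to ArangoDB's import API (assumption: /_api/import).

    With type=list the body is assumed to be a plain JSON array of documents.
    """
    query = urllib.parse.urlencode({"collection": collection, "type": "list"})
    body = json.dumps(docs).encode("utf-8")
    return urllib.request.Request(
        f"{ARANGODB_URL}/_api/import?{query}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    sample = [{"name": "a"}, {"name": "b"}]
    for req in (couchdb_bulk_request("bench", sample),
                arangodb_bulk_request("bench", sample)):
        # Show what would be sent; urlopen(req) would need a running server.
        print(req.get_method(), req.full_url)
```

Passing either request object to `urllib.request.urlopen()` would actually send it, which of course requires the corresponding server to be running.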

The test machine specifications are:

- Linux kernel, CFQ I/O scheduler

- 64-bit OS

- 8x Intel(R) Core(TM) i7 CPU, 2.67 GHz

- 12 GB total RAM

- SATA II hard drive (7,200 RPM, 32 MB cache)

The following charts report the total “net insert time” for several datasets. This is the time the benchmark tool spends sending requests to the database and waiting for its responses, i.e. excluding the time needed to create the chunks that are sent to the database.
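The “net insert time” definition can be illustrated with a short Python sketch: chunk creation (here, slicing and JSON encoding) happens outside the timed region, and only the call that sends a chunk and waits for the reply is measured. `send_payload` is a hypothetical callback standing in for the actual HTTP or binary-protocol request, and the chunk size is a free parameter because the post does not state the value the tool used.

```python
import json
import time
from typing import Callable, Iterator, List


def chunked(docs: List[dict], size: int) -> Iterator[List[dict]]:
    """Split the dataset into consecutive chunks of at most `size` documents."""
    for start in range(0, len(docs), size):
        yield docs[start:start + size]


def net_insert_time(docs: List[dict], chunk_size: int,
                    send_payload: Callable[[bytes], None]) -> float:
    """Return the summed time spent inside send_payload, in seconds.

    Chunk creation (slicing and JSON encoding) is done before the timer
    starts, so it is excluded from the result.
    """
    total = 0.0
    for chunk in chunked(docs, chunk_size):
        payload = json.dumps(chunk).encode("utf-8")  # chunk creation: not timed
        start = time.perf_counter()
        send_payload(payload)  # hypothetical: send request, wait for response
        total += time.perf_counter() - start
    return total


if __name__ == "__main__":
    # Stand-in for a real database call: pretend each round trip takes 10 ms.
    def fake_send(payload: bytes) -> None:
        time.sleep(0.01)

    docs = [{"value": i} for i in range(1000)]
    print(f"net insert time: {net_insert_time(docs, 100, fake_send):.3f} s")
```

Because encoding happens before the timer starts, a slow client-side serializer does not inflate the reported number; only the request/response round trips are counted.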

The database versions used in the tests were the current stable versions:

- MongoDB 2.6.6, with defaults

- CouchDB 1.6.1, with defaults (i.e. delayed_commits enabled), but with compression disabled

- ArangoDB 2.3.3, with defaults (i.e. waitForSync=false)

Several artificial and real-world datasets of different sizes were imported. All datasets are included in the benchmark tool’s repository.

Test results

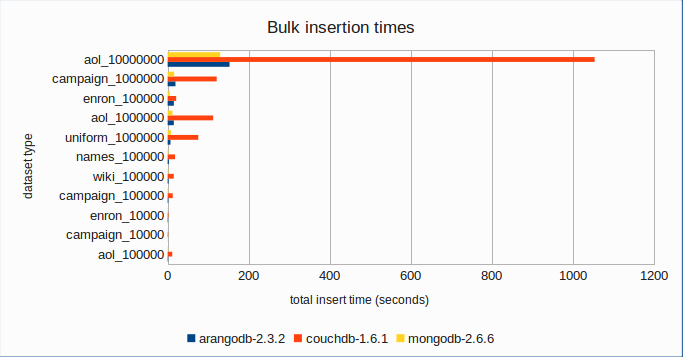

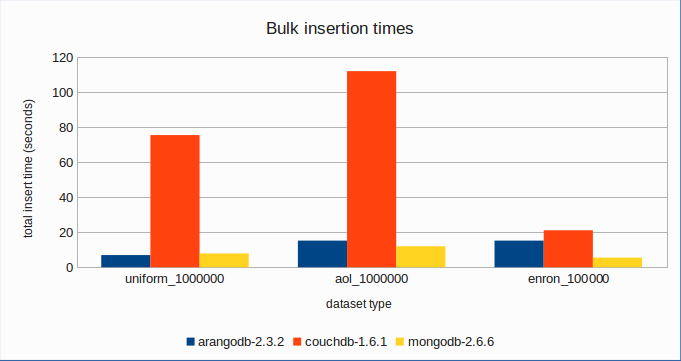

Here’s an overview of the results containing most datasets in a single chart:

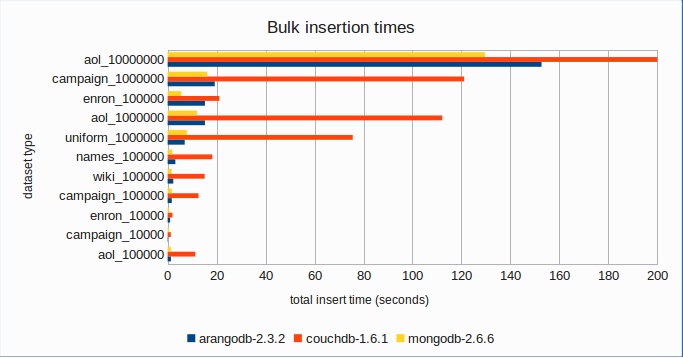

Here’s a closer look, with the x-axis scale limited to 0 – 200 to eliminate the CouchDB outlier:

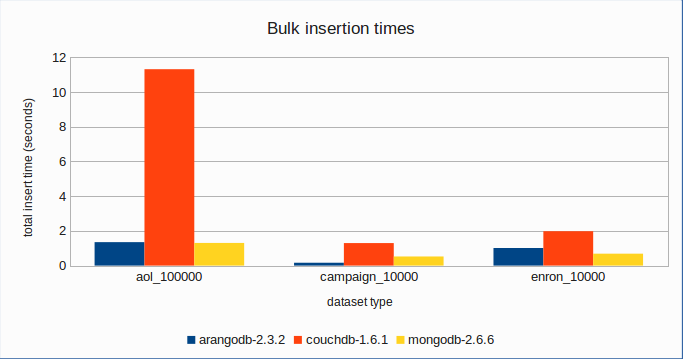

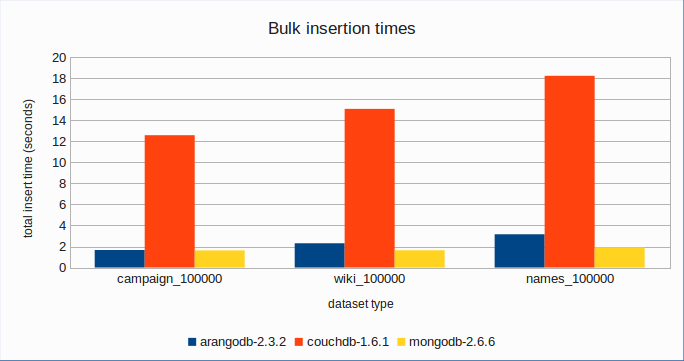

The following charts each contain only a few datasets, providing a more detailed view:

Conclusion

As the results show, the insertion times for ArangoDB and MongoDB are still in the same ballpark, and they have changed only minimally compared to the 2012 edition of this test. ArangoDB is still a bit slower than MongoDB in most of the tests, but the difference remains small. This is good to see: since 2012, several features that could have hurt performance have been added to ArangoDB (transactions, write-ahead log, replication, and sharding, to name only the most important). Adding these features does not seem to have degraded insert performance.

CouchDB is still trailing: its insertion times remain significantly higher than those of ArangoDB and MongoDB.

Caveats

These are benchmarks for specific datasets. The dataset volumes and types might or might not be realistic, depending on what you plan to do with a database. Results might look completely different for other datasets or for other test clients.

In addition, the benchmarks compare the HTTP APIs of CouchDB and ArangoDB against MongoDB’s binary protocol, which gives MongoDB a slight efficiency advantage. However, real-world applications also use MongoDB’s binary protocol, so this is an advantage MongoDB really has in practice (though it comes at the cost of a protocol that is not human-readable). Furthermore, there are other aspects that deserve attention, e.g. CPU and memory usage, which this post does not examine. So please run your own tests in your own environment before relying on these results.

We have run even more performance tests comparing various databases.

7 Comments


It would be interesting to see a comparison with random reads and writes and, more interestingly, durable writes.

I only learned of ArangoDB today. I’m currently a MongoDB user, which has replaced many years of primarily using MySQL. Based on the above performance, it looks like there’s no huge advantage for me to adopt Arango, but the query language is definitely interesting. I’ll be keeping my eyes on this project in case it brings an advantage to the table that Mongo doesn’t have. Good work folks.

Thank you 🙂

If you have any questions regarding ArangoDB, don’t hesitate to ask us on Google Groups or GitHub.

Only to name a few: joins, graphs, transactions, query language, HTTP API, data compression (to be added in MongoDB 3.0, I’ve heard), MVCC. But my benchmarks show greater differences than the numbers above (MongoDB being faster), and MongoDB has GridFS (which I like) and better sharding support. Oh well. Can’t be happy with *everything*.

Can you share more information about your benchmarks? That would be great. Just send me an email at claudius@arangodb.com

MongoDB 3.0 is out and comes with a new storage engine, I think it’s a good time to benchmark ArangoDB 2.6 against it!

We will definitely do this.