Building Our Managed Service on Kubernetes: ArangoDB Insights

Running distributed databases on-prem or in the cloud is always a challenge. Over the past years, we have invested a lot to make cluster deployments as simple as possible, both on traditional (virtual) machines (using the ArangoDB Starter) and on modern orchestration systems such as Kubernetes (using Kube-ArangoDB).

However, as long as teams have to run databases themselves, the burden of deploying, securing, monitoring, maintaining & upgrading can only be reduced to a certain extent, never avoided.

For this reason, we built ArangoDB ArangoGraph.

ArangoDB ArangoGraph is a managed service for running ArangoDB databases in the cloud. It provides our customers with a fast and easy way to use a fully functional and very secure ArangoDB cluster in the cloud. All operational aspects are covered by us so that you can entirely focus on your application.

At the time of writing, ArangoDB ArangoGraph (ArangoGraph for short) has been in pre-release for about two months, and general availability is approaching fast: the release is planned for November 2019.

This blog post is the story of how we built ArangoGraph using Kubernetes and what we have learned while building a managed cloud on many different cloud providers.

Basic architecture

ArangoGraph is an API-driven framework that is roughly split into two parts.

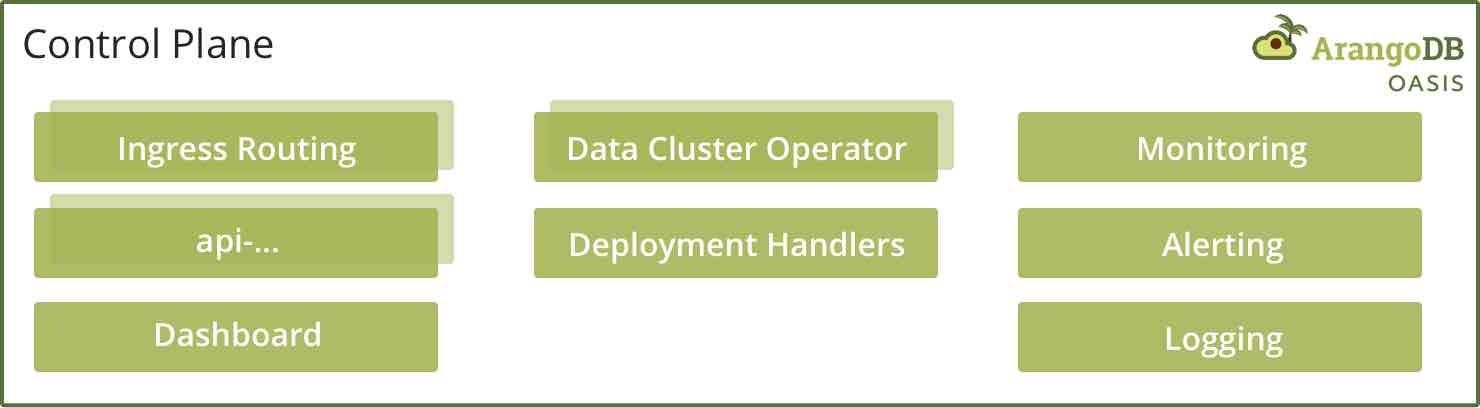

The first part is our “control plane.” That is where all our APIs are implemented, where our dashboard is served from, and where our system-wide monitoring stack lives.

The second part is called the “data cluster.”

There are many data clusters, one for each region on each cloud provider. That is where the ArangoDB clusters (we call them deployments) of our customers are running.
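To make the "one data cluster per region per cloud provider" rule concrete, here is a minimal sketch of how a control-plane service might look up the data cluster that should host a new deployment. The post does not describe how ArangoGraph actually stores this mapping; the class names and cluster names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class DataClusterKey:
    """One data cluster exists per (cloud provider, region) pair."""

    provider: str  # e.g. "gcp" or "aws"
    region: str  # e.g. "europe-west4" or "us-east-1"


class DataClusterRegistry:
    """Hypothetical control-plane lookup from (provider, region) to a data cluster."""

    def __init__(self) -> None:
        self._clusters: Dict[DataClusterKey, str] = {}

    def register(self, provider: str, region: str, cluster_name: str) -> None:
        key = DataClusterKey(provider, region)
        if key in self._clusters:
            # Enforce the "one data cluster per region per provider" rule.
            raise ValueError(f"a data cluster already exists for {provider}/{region}")
        self._clusters[key] = cluster_name

    def cluster_for(self, provider: str, region: str) -> str:
        """Return the data cluster that hosts deployments in this provider/region."""
        try:
            return self._clusters[DataClusterKey(provider, region)]
        except KeyError:
            raise LookupError(f"no data cluster for {provider}/{region}") from None


registry = DataClusterRegistry()
registry.register("gcp", "europe-west4", "dc-gcp-europe-west4")
registry.register("aws", "us-east-1", "dc-aws-us-east-1")
print(registry.cluster_for("aws", "us-east-1"))  # dc-aws-us-east-1
```

Keying on a frozen dataclass keeps the lookup explicit and makes an accidental second data cluster for the same region an error rather than a silent overwrite.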

Both parts of the framework are orchestrated using Kubernetes. Inside the Kubernetes cluster that orchestrates our control plane, we run over 30 small services, each focused on a small part of the overall API. For example, one service handles identity and access management, while others send emails, manage DNS domain records, and so on.

It also houses a few specialized services that create & manage data clusters. These services (we call them data-cluster-operators) build, maintain, and remove data clusters for a specific cloud. All of this is done in code; no Ansible, Puppet, Terraform, etc., is involved. Put everything together, and you get a fully automated, scalable platform.
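Because the operators are written as code rather than as provisioning scripts, their core is typically a reconcile step: compare the desired state of a data cluster with what the cloud reports, then take one step toward the desired state. The sketch below shows only that decision step; the post does not describe the operators' internals, so the types, fields, and actions are hypothetical, and the actual cloud API calls are left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    NOTHING = "nothing"
    CREATE_CLUSTER = "create-cluster"
    RESIZE_NODES = "resize-nodes"
    DELETE_CLUSTER = "delete-cluster"


@dataclass(frozen=True)
class DesiredDataCluster:
    """Desired state, as recorded by the control plane (hypothetical fields)."""

    name: str
    node_count: int
    marked_for_deletion: bool = False


@dataclass(frozen=True)
class ObservedDataCluster:
    """Actual state, as reported by the cloud provider's API (hypothetical fields)."""

    name: str
    node_count: int


def next_action(desired: DesiredDataCluster, observed: Optional[ObservedDataCluster]) -> Action:
    """Pick the single next step that moves the actual state toward the desired one.

    An operator calls this repeatedly; because each call only looks at the current
    state, an interrupted step is simply picked up again on the next call.
    """
    if desired.marked_for_deletion:
        return Action.DELETE_CLUSTER if observed is not None else Action.NOTHING
    if observed is None:
        return Action.CREATE_CLUSTER
    if observed.node_count != desired.node_count:
        return Action.RESIZE_NODES
    return Action.NOTHING
```

Taking one step per call, instead of running a long scripted sequence, is what lets such an operator recover from crashes or partial failures without special-case cleanup logic.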

The data clusters are also (managed) Kubernetes clusters. Inside these data clusters, we have a small set of services running that are responsible for deploying, maintaining, monitoring, and removing ArangoDB deployments. But the majority of resources of the data clusters are occupied by ArangoDB clusters.

Cluster != Cluster

Note that we use the term “cluster” a lot, but with different meanings.

- “Kubernetes cluster.” A cluster of nodes that run pods (see kubernetes.io)

- “ArangoDB cluster.” A cluster of ArangoDB nodes that form a highly available database.

- “Data cluster.” A special kind of Kubernetes cluster that we use to run ArangoDB deployments (“ArangoDB clusters”) for our customers.

Kubernetes as abstraction layer

As shown in the previous paragraphs, we are a heavy user of Kubernetes.

The declarative, self-healing nature of Kubernetes makes it an ideal layer on top of which to build our framework. You can compare the promise of Kubernetes to that of the Java Virtual Machine in the nineties. It abstracts away many of the problems that builders of such frameworks have to deal with. That is great! But it does not solve all of them.

Our goal was (and is) to offer all features to our customers, irrespective of which cloud provider they want to run their deployment in. To accomplish that, we build & configure our data clusters such that, once a data cluster is fully configured, the layers on top of it do not have to care which cloud platform it is running on.

Lots of features work like this out of the box. For example, configuring load balancers for the endpoints needed to reach the running deployments works the same way on all clouds. That does not mean the result is identical: the load balancer on AWS gives you a DNS name, whereas the same load balancer on GCP gives you an IP address. We hide that difference from our customers by mapping both to a DNS entry, using a CNAME record on AWS and an A record on GCP.
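A minimal sketch of that mapping, assuming the load balancer is exposed through a Kubernetes Service of type `LoadBalancer`: Kubernetes reports the provisioned load balancer under `status.loadBalancer.ingress`, where each entry carries either a `hostname` or an `ip`. The function name, the domain, and the sample hostname and address are illustrative only; this is not ArangoGraph's actual code.

```python
from typing import Any, Dict, Tuple


def dns_record_for(fqdn: str, service_status: Dict[str, Any]) -> Tuple[str, str, str]:
    """Map a LoadBalancer Service's status to a DNS record (name, type, value).

    Kubernetes reports provisioned load balancers under status.loadBalancer.ingress;
    each entry has either a "hostname" (as on AWS) or an "ip" (as on GCP).
    """
    ingress = service_status.get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        raise ValueError("load balancer has not been provisioned yet")
    entry = ingress[0]
    if entry.get("hostname"):
        # The cloud handed us a DNS name: alias our name to it.
        return (fqdn, "CNAME", entry["hostname"])
    if entry.get("ip"):
        # The cloud handed us an IP address: point our name at it directly.
        return (fqdn, "A", entry["ip"])
    raise ValueError(f"unexpected load balancer ingress entry: {entry!r}")


# Illustrative Service statuses (hypothetical hostname and documentation IP).
aws_status = {"loadBalancer": {"ingress": [{"hostname": "example-123.us-east-1.elb.amazonaws.com"}]}}
gcp_status = {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}
print(dns_record_for("db1.example.com", aws_status))
# ('db1.example.com', 'CNAME', 'example-123.us-east-1.elb.amazonaws.com')
print(dns_record_for("db1.example.com", gcp_status))
# ('db1.example.com', 'A', '203.0.113.10')
```

Keying on what the status actually contains, rather than on the provider name, keeps the layers above the data cluster unaware of which cloud they run on.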

Abstracting creation of Kubernetes clusters

The challenges grow, however, in our data-cluster-operators. Remember that these are responsible for creating Kubernetes clusters, not using them. When you only use Kubernetes, you have well-known, well-documented APIs at your disposal. Creating Kubernetes clusters, on the other hand, involves a very different set of APIs, designed at completely different levels of abstraction.

On GCP, we make use of GKE (Google Kubernetes Engine). GKE has a well-designed, high-level interface. The primary entities we have to work with are “Cluster” and “NodePool.” These APIs are relatively straightforward, and creating a Kubernetes cluster with them is a simple task. Further down the road, we noticed that the simplicity of the API hides some annoying semantics, such as restrictions on creating clusters in parallel.

Using these straightforward APIs, we had our data-cluster-operator for GCP fully functional in a matter of days.

On AWS, we make use of EKS (Elastic Kubernetes Service). It turned out that this API is lightyears away from the simplicity of GKE’s APIs. Creating an EKS cluster involves dealing with all kinds of low-level entities such as SecurityGroups, NetworkInterfaces, IP addresses, and the list goes on and on.

Once your EKS cluster appears to be running, you find that you still need to add worker nodes, and those have to be auto-scaled. That involves running the Kubernetes cluster-autoscaler, something that GKE hides behind a single boolean.
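For comparison, here is roughly what enabling autoscaling looks like on GKE: a request body for the GKE v1 `nodePools.create` call, where autoscaling is the `enabled` flag plus node-count bounds. The field names follow the public GKE REST API; the pool name, machine type, and bounds are placeholders, and the helper function itself is hypothetical. On EKS there is no equivalent switch; the cluster-autoscaler has to be deployed and configured separately.

```python
from typing import Any, Dict


def gke_node_pool_request(name: str, machine_type: str, min_nodes: int, max_nodes: int) -> Dict[str, Any]:
    """Build a request body for GKE's v1 nodePools.create with autoscaling enabled."""
    if max_nodes < 1 or not 0 <= min_nodes <= max_nodes:
        raise ValueError("expected 0 <= min_nodes <= max_nodes and max_nodes >= 1")
    return {
        "nodePool": {
            "name": name,
            # Start with at least one node, never more than the upper bound.
            "initialNodeCount": max(min_nodes, 1),
            "config": {"machineType": machine_type},
            # On GKE, autoscaling is this flag plus bounds; nothing extra to run.
            "autoscaling": {
                "enabled": True,
                "minNodeCount": min_nodes,
                "maxNodeCount": max_nodes,
            },
        }
    }


body = gke_node_pool_request("default-pool", "n1-standard-4", min_nodes=1, max_nodes=5)
```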

Removing an EKS cluster is even more complex than creating one. Because there are lots of dependencies between the low-level entities, you cannot just remove the cluster with a single API call. Instead, you have to carefully detach and remove resources, often waiting in between, to remove all traces of the cluster.
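One way to structure such a teardown is to model the resources as a dependency graph, delete them in reverse creation (topological) order, and wait for each deletion to complete before moving on. The sketch below assumes Python 3.9+ for `graphlib`; the resource names and dependencies are hypothetical and much simpler than a real EKS setup, and `delete`/`is_gone` stand in for the actual AWS API calls.

```python
import time
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def teardown_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return a safe deletion order for interdependent cloud resources.

    depends_on[r] holds the resources that r needs (e.g. a node group needs its
    cluster). Creation follows a topological order; deletion is its reverse, so
    nothing is deleted while something else still depends on it.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    creation_order = list(TopologicalSorter(depends_on).static_order())
    return creation_order[::-1]


def tear_down(
    depends_on: Dict[str, Set[str]],
    delete: Callable[[str], None],
    is_gone: Callable[[str], bool],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 900.0,
) -> None:
    """Delete resources one by one, waiting until each is really gone."""
    for resource in teardown_order(depends_on):
        delete(resource)
        deadline = time.monotonic() + timeout_seconds
        # Cloud deletions are often asynchronous: poll before touching dependencies.
        while not is_gone(resource):
            if time.monotonic() > deadline:
                raise TimeoutError(f"{resource} was not deleted in time")
            time.sleep(poll_seconds)


# Hypothetical, heavily simplified dependency graph.
deps = {
    "subnets": {"vpc"},
    "security-group": {"vpc"},
    "eks-cluster": {"subnets", "security-group"},
    "node-group": {"eks-cluster"},
    "load-balancer": {"subnets", "security-group"},
}
deleted: List[str] = []
tear_down(deps, delete=deleted.append, is_gone=lambda r: r in deleted)
print(deleted[0], deleted[-1])  # node-group vpc
```

Waiting for each resource to disappear before deleting what it depends on avoids the "resource still in use" failures that make naive teardown scripts flaky.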

Separating deployments

Given that we run many deployments for different customers in the same data cluster, it is vital to separate these deployments from each other completely.

This is even more critical because ArangoDB has an excellent feature for data-centric microservices called Foxx services. Foxx lets you run JavaScript services inside the database process. That enables many powerful use cases for developers, but it also puts even more emphasis on shielding deployments: not only from each other, but also from the underlying infrastructure and from the internet. This cannot be achieved using standard Kubernetes APIs or resources.

To accomplish the separation we want, we make use of a great project called Cilium. Cilium adds identity-aware network security to Kubernetes pods (and other orchestration environments) using a technology called BPF. BPF is a relatively new Linux kernel technology that allows for advanced network filtering & access control rules deep down in the Linux kernel. The use of BPF in Cilium results in blazing fast network filtering that is easy to configure and maintain.

Luckily for us, the Cilium project has already done all the hard work to ensure that their technology works on all major cloud platforms. All we had to do was construct fine-grained network rules to achieve our desired separation.

Identity awareness allows us, for example, to specify that Prometheus (used for monitoring) is allowed to scrape servers in a customer deployment, while at the same time these servers have no access whatsoever to Prometheus.
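A rule like that could be expressed as a `CiliumNetworkPolicy` resource. The sketch below builds such a manifest as a Python dict (which can be serialized to JSON and passed to `kubectl apply -f`). The resource structure follows Cilium's `cilium.io/v2` policy format; the pod labels, namespace names, and the assumption that metrics are scraped on ArangoDB's default port 8529 are hypothetical, and this is not ArangoGraph's actual rule set.

```python
import json
from typing import Any, Dict

ARANGODB_PORT = "8529"  # ArangoDB's default port; assumed here to also serve metrics


def sandbox_policy(deployment: str, namespace: str, monitoring_namespace: str = "monitoring") -> Dict[str, Any]:
    """Build a CiliumNetworkPolicy manifest isolating one ArangoDB deployment."""
    own_pods = {"arangodb-deployment": deployment}  # hypothetical pod label
    prometheus = {
        # Cilium selects pods in another namespace via this special label.
        "k8s:io.kubernetes.pod.namespace": monitoring_namespace,
        "app": "prometheus",  # hypothetical pod label
    }
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": f"{deployment}-sandbox", "namespace": namespace},
        "spec": {
            "endpointSelector": {"matchLabels": own_pods},
            "ingress": [
                # Servers of the same deployment may talk to each other.
                {"fromEndpoints": [{"matchLabels": own_pods}]},
                # Prometheus may connect in to scrape the servers.
                {
                    "fromEndpoints": [{"matchLabels": prometheus}],
                    "toPorts": [{"ports": [{"port": ARANGODB_PORT, "protocol": "TCP"}]}],
                },
            ],
            # Outgoing traffic is limited to the deployment's own pods: nothing to
            # Prometheus, other tenants, the infrastructure, or the internet.
            # (A real policy would likely also have to allow DNS lookups.)
            "egress": [{"toEndpoints": [{"matchLabels": own_pods}]}],
        },
    }


policy = sandbox_policy("abc123", "tenant-abc123")
print(json.dumps(policy, indent=2))
```

Cilium's policy enforcement is stateful, so replies to Prometheus' scrape connections are allowed, even though the servers themselves cannot open a connection to Prometheus.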

The resulting rules create a sandbox-like environment for each deployment. From the viewpoint of a deployment, our sandbox is indistinguishable from a single-tenant environment.

What’s next

In this article, we’ve only scratched the surface of what it takes to build a managed service like ArangoDB ArangoGraph. In future articles, we will dive into more aspects of the ArangoGraph platform and our experience using Kubernetes to build it.

In the meantime, feel free to take ArangoGraph for a spin or join the bi-weekly newsletter (in the footer below) to get the latest news about ArangoGraph.

Stay tuned for our GA launch of ArangoGraph in November 2019 🙂

2 Comments



Does ArangoDB Oasis support local SSD storage? If not, will it be supported in the future?

Thanks.

Hi Ken,

Please get in touch with us via our contact form, so we can better understand your requirements.

Right now, all volumes are SSD but not locally attached. Support for locally attached SSDs is being considered, but there is no ETA for this yet.

Thanks,

Ewout